Apache NiFi notes

Russell Bateman

January 2016

last update:

|

Apache NiFi notes

|

(See A simple NiFi processor project for minimal source code and pom.xml files.)

Managing templates in NiFi 1.x. Go here for the best illustrated guide demonstrating Apache NiFi templates or go here: NiFi templating notes.

(How to get NiFi to... work (unsecurely) as before for personal use.)

PrefaceThe notes are in chronological order, as I needed and made them. I haven't yet attempted to organize them more usefully. The start happens below under Friday, 15 January 2016. According to Wikipedia, NiFi originated at the NSA where it originally bore the name, Niagarafiles (from Niagara Falls). It was made open source, then picked up for continued development by Onyara, Inc., a start-up company later acquired by Hortonworks in August, 2015. NiFi has been described as a big-data technology, as "data in motion," in opposition to Hadoop, which is "data at rest." Apache NiFi is the perfect tool for performing extract, transfer and load (ETL) of data records. It is a somewhat low-code environment and IDE for developing data pipelines. The principal NiFi developer documentation is cosmetically beautiful, however, it suffers from being written very much by someone who already thoroughly understands his topic without the sensitivity that his audience do not. Therefore, it's a great place to go to as a reference, but rarely as a method. One can easily become acquainted with the elements of what must be done, but rarely if ever know how to write the code to access those elements let alone the best way to do it. For method documentation, a concept in language learning, which often only appears for technology such as NiFi long after the fact in our industry, you'll need to look fort the "how-to" stuff in the bulleted list below, as well as in my notes here. |

NiFi 1.x UI overview and other videos |

My goal in writing this page is a) to record what I've learned for later reference and b) to leave breadcrumbs for others like me.

In my company, we were doing extract, load and transfer (ETL) by way of a hugely complicated and proprietary monolith that was challenging to understand. I had proposed beginning to abandon it in favor of using a queue-messaging system, something I was very familiar with. A few months later, a director discovered NiFi and put my colleague up to looking into it. Earlier on, I glanced at it, but was swamped on a project and did not pursue it until after my colleague had basically adopted and promoted it as a replacement for our old ETL process. It only took us a few weeks to a) create a shim by which our existing ETL component code became fully exploitable and b) spin up a number of NiFi-native components including ports of some of the more important, legacy ones. For me, the road has been a gratifying one: I no longer need to grok the old monolith and can immediately contribute to the growing number of processors to do very interesting things. It leveled my own playing field as a newer member of staff.

Stuff I don't have to keep in the pile that's my brain because I just look here (rather than deep down inside these chronologically recorded notes):

@Test

public void test() throws Exception

{

TestRunner runner = TestRunners.newTestRunner( new ExcerptBase64FromHl7() );

final String HL7 =

"MSH|^~\\&|Direct Email|XYZ|||201609061628||ORU^R01^ORU_R01|1A160906202824456947||2.5\n"

+ "PID|||000772340934||TEST^Sandoval^||19330801|M|||1 Mockingbird Lane^^ANYWHERE^TN^90210^||\n"

+ "PV1||O\n"

+ "OBR||62281e18-b851-4218-9ed5-bbf392d52f84||AD^Advance Directive"

+ "OBX|1|ED|AD^Advance Directive^3.665892.1.238973.3.1234||^multipart^^Base64^"

+ "F_ABCDEFGHIJKLMNOPQRSTUVWXYZ";

// mock up some attributes...

Map< String, String > attributes = new HashMap<>();

attributes.put( "attribute-name", "attribute-value" );

attributes.put( "another-name", "another-value" );

// mock up some properties...

runner.setProperty( ExcerptBase64FromHl7.A_STATIC_PROPERTY, "static-property-value" );

runner.setProperty( "dynamic property", "some value" );

// add a dynamic property...

runner.setValidateExpressionUsage( false );

runner.setProperty( "property-name", "value" );

// call the processor...

runner.setValidateExpressionUsage( false );

runner.enqueue( new ByteArrayInputStream( HL7.getBytes() ), attributes );

runner.run( 1 );

runner.assertQueueEmpty();

// gather the results...

List< MockFlowFile > results = runner.getFlowFilesForRelationship( ExcerptBase64FromHl7.SUCCESS );

assertTrue( "1 match", results.size() == 1 );

MockFlowFile result = results.get( 0 );

String actual = new String( runner.getContentAsByteArray( result ) );

assertTrue( "Missing binary content", actual.contains( "1_ABCDEFGHIJKLMNOPQRSTUVWXYZ" ) );

// ensure the processor transmitted the attribute...

String attribute = result.getAttribute( "attribute-name" );

assertTrue( "Failed to transmit attribute", attribute.equals( "attribute-value" ) );

}

Here is a collection of some NiFi notes...

Update, February 2017: what's the real delta between 0.x and 1.x? Not too much until you get down into gritty detail, something you won't do when just acquainting yourself.

Now I'm looking at:

Unsuccessful last Friday, I took another approach to working on the NiFi example from IntelliJ IDEA. By no means do I think it must be done this way, however, Eclipse was handy for ensuring stuff got set up correctly. The article assumes much about setting up a project.

The pom.xml. There are several ultra-critical relationships to beware of.

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0

http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>rocks.nifi</groupId>

<artifactId>examples</artifactId> <!-- change this to change project name -->

<version>1.0-SNAPSHOT</version>

<packaging>nar</packaging>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.7</maven.compiler.source>

<maven.compiler.target>1.7</maven.compiler.target>

<nifi.version>0.4.1</nifi.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.nifi</groupId>

<artifactId>nifi-api</artifactId>

<version>${nifi.version}</version>

</dependency>

<dependency>

<groupId>org.apache.nifi</groupId>

<artifactId>nifi-utils</artifactId>

<version>${nifi.version}</version>

</dependency>

<dependency>

<groupId>org.apache.nifi</groupId>

<artifactId>nifi-processor-utils</artifactId>

<version>${nifi.version}</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-io</artifactId>

<version>1.3.2</version>

</dependency>

<dependency>

<groupId>com.jayway.jsonpath</groupId>

<artifactId>json-path</artifactId>

<version>1.2.0</version>

</dependency>

<dependency>

<groupId>org.apache.nifi</groupId>

<artifactId>nifi-mock</artifactId>

<version>${nifi.version}</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.10</version>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.nifi</groupId>

<artifactId>nifi-nar-maven-plugin</artifactId>

<version>1.0.0-incubating</version>

<extensions>true</extensions>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.15</version>

</plugin>

</plugins>

</build>

</project>

<!-- vim: set tabstop=2 shiftwidth=2 expandtab: -->

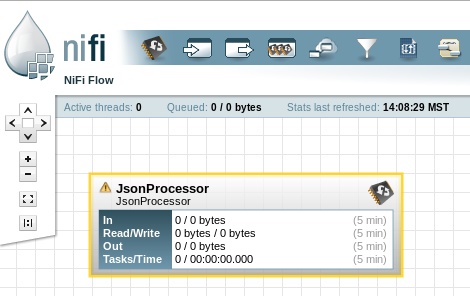

Of course, once you've also created JsonProcessor.java at the end of the package created, you just do as the article says. You should end up with:

~/dev/nifi/projects/json-processor $ tree . ├── pom.xml └── src ├── main │ ├── java │ │ └── rocks │ │ └── nifi │ │ └── examples │ │ └── processors │ │ └── JsonProcessor.java │ └── resources │ └── META-INF │ └── services │ └── org.apache.nifi.processor.Processor └── test └── java └── rocks └── nifi └── examples └── processors └── JsonProcessorTest.java 16 directories, 4 files

The essential is:

The IntelliJ IDEA project can be opened as usual in place of Eclipse. Here's success:

See NiFi Developer's Guide: Processor API for details on developing a processor, what I've just done. Importantly, this describes

Here's an example from NiFi Rocks:

package rocks.nifi.examples.processors;

import java.io.IOException;

import java.io.InputStream;

import java.io.OutputStream;

import java.util.ArrayList;

import java.util.Collections;

import java.util.HashSet;

import java.util.List;

import java.util.Set;

import java.util.concurrent.atomic.AtomicReference;

import com.jayway.jsonpath.JsonPath;

import org.apache.commons.io.IOUtils;

import org.apache.nifi.annotation.behavior.SideEffectFree;

import org.apache.nifi.annotation.documentation.CapabilityDescription;

import org.apache.nifi.annotation.documentation.Tags;

import org.apache.nifi.components.PropertyDescriptor;

import org.apache.nifi.flowfile.FlowFile;

import org.apache.nifi.logging.ProcessorLog;

import org.apache.nifi.processor.AbstractProcessor;

import org.apache.nifi.processor.ProcessContext;

import org.apache.nifi.processor.ProcessSession;

import org.apache.nifi.processor.ProcessorInitializationContext;

import org.apache.nifi.processor.Relationship;

import org.apache.nifi.processor.exception.ProcessException;

import org.apache.nifi.processor.io.InputStreamCallback;

import org.apache.nifi.processor.io.OutputStreamCallback;

import org.apache.nifi.processor.util.StandardValidators;

/**

* Our NiFi JSON processor from

* http://www.nifi.rocks/developing-a-custom-apache-nifi-processor-json/

*/

@SideEffectFree

@Tags( { "JSON", "NIFI ROCKS" } ) // for finding processor in the GUI

@CapabilityDescription( "Fetch value from JSON path." ) // also displayed in the processor selection box

public class JsonProcessor extends AbstractProcessor

{

private List< PropertyDescriptor > properties; // one of these for each property of the processor

private Set< Relationship > relationships; // one of these for each possible arc away from the processor

@Override public List< PropertyDescriptor > getSupportedPropertyDescriptors() { return properties; }

@Override public Set< Relationship > getRelationships() { return relationships; }

// our lone property...

public static final PropertyDescriptor JSON_PATH = new PropertyDescriptor.Builder()

.name( "Json Path" )

.required( true )

.addValidator( StandardValidators.NON_EMPTY_VALIDATOR )

.build();

// our lone relationship...

public static final Relationship SUCCESS = new Relationship.Builder()

.name( "SUCCESS" )

.description( "Succes relationship" )

.build();

/**

* Called at the start of Apache NiFi. NiFi is a highly multi-threaded environment; be

* careful what is done in this space. This is why both the list of properties and the

* set of relationships are set with unmodifiable collections.

* @param context supplies the processor with a) a ProcessorLog, b) a unique

* identifier and c) a ControllerServiceLookup to interact with

* configured ControllerServices.

*/

@Override

public void init( final ProcessorInitializationContext context )

{

List< PropertyDescriptor > properties = new ArrayList<>();

properties.add( JSON_PATH );

this.properties = Collections.unmodifiableList( properties );

Set< Relationship > relationships = new HashSet<>();

relationships.add( SUCCESS );

this.relationships = Collections.unmodifiableSet( relationships );

}

/**

* Called when ever a flowfile is passed to the processor. The AtomicReference

* is required because in order to have access to the variable in the call-back scope it

* needs to be final. If it were just a final String it could not change.

*/

@Override

public void onTrigger( ProcessContext context, ProcessSession session ) throws ProcessException

{

final ProcessorLog log = this.getLogger();

final AtomicReference< String > value = new AtomicReference<>();

FlowFile flowfile = session.get();

// ------------------------------------------------------------------------

session.read( flowfile, new InputStreamCallback()

{

/**

* Uses Apache Commons to read the input stream out to a string. Uses

* JsonPath to attempt to read the JSON and set a value to the pass on.

* It would normally be best practice in the case of a exception to pass

* the original flowfile to a Error relation point.

*/

@Override

public void process( InputStream in ) throws IOException

{

try

{

String json = IOUtils.toString( in );

String result = JsonPath.read( json, "$.hello" );

value.set( result );

}

catch( Exception e )

{

e.printStackTrace();

log.error( "Failed to read json string." );

}

}

} );

// ------------------------------------------------------------------------

String results = value.get();

if( results != null && !results.isEmpty() )

flowfile = session.putAttribute( flowfile, "match", results );

// ------------------------------------------------------------------------

flowfile = session.write( flowfile, new OutputStreamCallback()

{

/**

* For both reading and writing to the same flowfile. With both the

* outputstream call-back and stream call-back remember to assign it

* back to a flowfile. This processor is not in use in the code, but

* could have been. The example was deliberate to show a way of moving

* data out of callbacks and back in.

*/

@Override

public void process( OutputStream out ) throws IOException

{

out.write( value.get().getBytes() );

}

} );

// ------------------------------------------------------------------------

/* Every flowfile that is generated needs to be deleted or transfered.

*/

session.transfer( flowfile, SUCCESS );

}

/**

* Called every time a property is modified in case we want to do something

* like stop and start a Flowfile over in consequence.

* @param descriptor indicates which property was changed.

* @param oldValue value unvalidated.

* @param newValue value unvalidated.

*/

@Override

public void onPropertyModified( PropertyDescriptor descriptor, String oldValue, String newValue ) { }

}

(See this note for interacting with a flowfile in both input- and output modes at once.)

Before anything else, the processor's init() method is called. It looks like this happens at NiFi start-up rather than later when the processor is actually used.

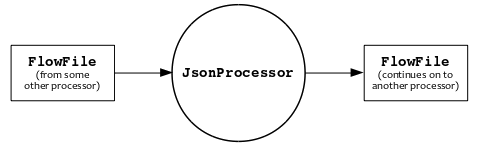

The processor must expose to the NiFi framework all of the Relationships it supports. The relationships are the arcs in the illustration below.

Performing the work. This happens when onTrigger() is called (by the framework).

The onTrigger() method is passed the context and the session, and it must:

A processor won't in fact be triggered, even if it has one or more FlowFile in its queue if the framework, checking downstream destinations (i.e. all other processors) and these are full. There is an annotation, @TriggerWhenAnyDestinationAvailable, which when present will allow the FlowFile to be processed as long as it can advance to the next processor.

@TriggerSerially will make the processor work as single-threaded. However, the processor implementation must still be thread-safe as the thread of execution may change.

Properties are exposed to the web interface via public method, but the properties themselves are locked using Collections.unmodifiableList() (if coded that way). If a property is changed as a processor is running, the latter can react eagerly if it implements onPropertyModified().

(There is no sample code of validating processor properties. Simply, the customValidate() method is called with a validation context and any that describe problems are returned . (But, is this implemented?)

protected Collection< ValidationResult > customValidate( ValidationContext validationContext )

{

return Collections.emptySet();

}

It's possible to augment initialization and enabling lifecycles through Java annotion. Some of these apply only to processor components, some to controller components, some to reporting tasks, to one or more.

There's a way to document various things that reach the user level like properties and relationships. This is done through annotation, see Documenting a Component and see the JSON processor sample code at the top of the class.

"Advanced documentation," what appears when a user right-clicks a processor in the web UI, provides a Usage menu item. What goes in there is done in additionalDetails.html. This file must be dropped into the root of the processor's JAR (NAR) file in subdirectory, docs. See Advanced Documentation.

In practice, this might be a little confusing. Here's an illustration. If I were going to endow my sample JSON processor with such a document, it would live here (see additionalDetails.html below):

~/dev/nifi/projects/json-processor $ tree . ├── pom.xml └── src ├── main │ ├── java │ │ └── rocks │ │ └── nifi │ │ └── examples │ │ └── processors │ │ └── JsonProcessor.java │ └── resources │ ├── META-INF │ │ └── services │ │ └── org.apache.nifi.processor.Processor │ └── docs │ └── rocks.nifi.examples.processors.JsonProcessor │ └── additionalDetails.html └── test └── java └── rocks └── nifi └── examples └── processors └── JsonProcessorTest.java 18 directories, 5 files

When one gets Usage from the context menu, an index.html is generated that "hosts" additionalDetails.html, but covers standard, default NiFi processor topics including auto-generated coverage of the current processor (based on what NiFi knows about properties and the like). additionalDetails.html is only necessary if that index.html documentation is inadequate.

Gathering resources (see dailies for today).

package com.etretatlogiciels.nifi.python.filter;

import org.apache.commons.io.IOUtils;

import org.apache.nifi.annotation.documentation.CapabilityDescription;

import org.apache.nifi.annotation.documentation.Tags;

import org.apache.nifi.flowfile.FlowFile;

import org.apache.nifi.processor.AbstractProcessor;

import org.apache.nifi.processor.ProcessContext;

import org.apache.nifi.processor.ProcessSession;

import org.apache.nifi.processor.ProcessorInitializationContext;

import org.apache.nifi.processor.Relationship;

import org.apache.nifi.processor.exception.ProcessException;

import org.apache.nifi.processor.io.OutputStreamCallback;

import java.io.IOException;

import java.io.InputStream;

import java.io.OutputStream;

import java.util.Collections;

import java.util.HashSet;

import java.util.Set;

@Tags( { "example", "resources" } )

@CapabilityDescription( "This example processor loads a resource from the nar and writes it to the FlowFile content" )

public class WriteResourceToStream extends AbstractProcessor

{

public static final Relationship REL_SUCCESS = new Relationship.Builder().name( "success" )

.description( "files that were successfully processed" )

.build();

public static final Relationship REL_FAILURE = new Relationship.Builder().name( "failure" )

.description( "files that were not successfully processed" )

.build();

private Set< Relationship > relationships;

private String resourceData;

@Override protected void init( final ProcessorInitializationContext context )

{

final Set< Relationship > relationships = new HashSet<>();

relationships.add( REL_SUCCESS );

relationships.add( REL_FAILURE );

this.relationships = Collections.unmodifiableSet( relationships );

final InputStream resourceStream = Thread.currentThread().getContextClassLoader().getResourceAsStream( "file.txt" );

try

{

this.resourceData = IOUtils.toString( resourceStream );

}

catch( IOException e )

{

throw new RuntimeException( "Unable to load resources", e );

}

finally

{

IOUtils.closeQuietly( resourceStream );

}

}

@Override

public void onTrigger( final ProcessContext context, final ProcessSession session ) throws ProcessException

{

FlowFile flowFile = session.get();

if( flowFile == null )

return;

try

{

flowFile = session.write( flowFile, new OutputStreamCallback()

{

@Override public void process( OutputStream out ) throws IOException

{

IOUtils.write( resourceData, out );

}

} );

session.transfer( flowFile, REL_SUCCESS );

}

catch( ProcessException ex )

{

getLogger().error( "Unable to process", ex );

session.transfer( flowFile, REL_FAILURE );

}

}

}

I need to figure out how to get property values. The samples I've seen so far pretty well avoid that. So, I went looking for it this morning and found it looking in the implementation of GetFile, a processor that comes with NiFI. Let me fill in the context here and highlight the actual statement below:

@TriggerWhenEmpty

@Tags( { "local", "files", "filesystem", "ingest", "ingress", "get", "source", "input" } )

@CapabilityDescription( "Creates FlowFiles from files in a directory. "

+ "NiFi will ignore files it doesn't have at least read permissions for." )

public class GetFile extends AbstractProcessor

{

public static final PropertyDescriptor DIRECTORY = new PropertyDescriptor.Builder()

.name( "input directory" ) // internal name (and used if displayName missing)*

.displayName( "Input Directory" ) // public name seen in UI

.description( "The input directory from which to pull files" )

.required( true )

.addValidator( StandardValidators.createDirectoryExistsValidator( true, false ) )

.expressionLanguageSupported( true )

.build();

.

.

.

@Override

protected void init( final ProcessorInitializationContext context )

{

final List< PropertyDescriptor > properties = new ArrayList<>();

properties.add( DIRECTORY );

.

.

.

}

.

.

.

@OnScheduled

public void onScheduled( final ProcessContext context )

{

fileFilterRef.set( createFileFilter( context ) );

fileQueue.clear();

}

@Override

public void onTrigger( final ProcessContext context, final ProcessSession session ) throws ProcessException

{

final File directory = new File( context.getProperty( DIRECTORY ).evaluateAttributeExpressions().getValue() );

final boolean keepingSourceFile = context.getProperty( KEEP_SOURCE_FILE ).asBoolean();

final ProcessorLog logger = getLogger();

.

.

.

}

}

* See Sets a unique id for the property. This field is optional and if not specified the PropertyDescriptor's name will be used as the identifying attribute. However, by supplying an id, the PropertyDescriptor's name can be changed without causing problems. This is beneficial because it allows a User Interface to represent the name differently.

Originally, NiFi just had one of these (name) and, obviously, when a spelling mistake begged for correction, because this is the field in flow.xml, any change immediately broke the processor.

See Processor PropertyDescriptor name vs. displayName below.

Today I was experiencing the difficulty of writing tests for a NiFi processor only to learn that there are helps from NiFi for this. I found the test for the original, JSON processor example.

package rocks.nifi.examples.processors;

import java.io.ByteArrayInputStream;

import java.io.IOException;

import java.io.InputStream;

import java.util.List;

import org.apache.commons.io.IOUtils;

import org.junit.Test;

import static org.junit.Assert.assertTrue;

import org.apache.nifi.util.TestRunner;

import org.apache.nifi.util.TestRunners;

import org.apache.nifi.util.MockFlowFile;

public class JsonProcessorTest

{

@Test

public void testOnTrigger() throws IOException

{

// Content to be mock a json file

InputStream content = new ByteArrayInputStream( "{\"hello\":\"nifi rocks\"}".getBytes() );

// Generate a test runner to mock a processor in a flow

TestRunner runner = TestRunners.newTestRunner( new JsonProcessor() );

// Add properites

runner.setProperty( JsonProcessor.JSON_PATH, "$.hello" );

// Add the content to the runner

runner.enqueue( content );

// Run the enqueued content, it also takes an int = number of contents queued

runner.run( 1 );

// All results were processed with out failure

runner.assertQueueEmpty();

// If you need to read or do aditional tests on results you can access the content

List< MockFlowFile > results = runner.getFlowFilesForRelationship( JsonProcessor.SUCCESS );

assertTrue( "1 match", results.size() == 1 );

MockFlowFile result = results.get( 0 );

String resultValue = new String( runner.getContentAsByteArray( result ) );

System.out.println( "Match: " + IOUtils.toString( runner.getContentAsByteArray( result ) ) );

// Test attributes and content

result.assertAttributeEquals( JsonProcessor.MATCH_ATTR, "nifi rocks" );

result.assertContentEquals( "nifi rocks" );

}

}

What does it do? NiFi TestRunner mocks out the NiFi framework and creates all the underpinnings I was trying today to create. This makes it so that calls inside onTrigger() don't summarily crash. I stepped through the test (above) to watch it work. All the references to the TestRunner object are ugly and only to set up what the framework would create for the processor, but it does work.

Had to work through these problems...

java.lang.AssertionError: Processor has 2 validation failures: 'basename' validated against 'CCD_Sample' is invalid because 'basename' is not a supported property 'doctype' validated against 'XML' is invalid because 'doctype' is not a supported property

—these properties don't even exist.

Caused by: java.lang.IllegalStateException: Attempting to Evaluate Expressions but PropertyDescriptor[Path to Python Interpreter] indicates that the Expression Language is not supported. If you realize that this is the case and do not want this error to occur, it can be disabled by calling TestRunner.setValidateExpressionUsage(false)

—so I disabled it. However, when this error or something like it is issued in the debugger:

java.lang.AssertionError: Processor has 1 validation failures: 'property name' validated against 'property default value' is invalid because 'property name' is not a supported property

it's likely because you didn't add validation to the property when defined:

.addValidator( StandardValidators.NON_EMPTY_VALIDATOR )

Anyway, let's keep going...

Then, I reached the session.read() bit which appeared to execute, but processing never returned. So, I killed it, set a breakpoint on the session.write(), and relaunched. It got there. I set a breakpoint on the while( ( line = reply.readLine() ) != null ) and it got there. I took a single step more and, just as before, processing never returned.

Then I break-pointed out.write() and the first call to session.putAttribute() and relaunched. Processing never hit those points.

Will continue this tomorrow.

I want to solve my stream problems in python-filter. Note that the source code to GetFile is not a good example of what I'm trying to do because I'm getting the data to work on from (the session.read()) stream, passing it to the Python filter, then reading it back and spewing it down (the session.write()) output stream. For the (session.read()) input stream, ...

...maybe switch from readLine() to a byte-by-byte processing? But, all the examples in all kinds of situations (HTTP, socket, file, etc.) are using readLine().

The old Murphism about a defective appliance demonstrated to a competent technician will fail to fail applies here. My code was actually working. What was making me think it wasn't? Uh, well, for one thing, all the flowfile = session.putAttributes( flowfile, ... ) calls were broken for the same reason (higher up yesterday) other properties stuff wasn't.

Where am I?

I had to append "quit" to the end of my test data because that's the only way to tell the Python script that I'm done sending data.

So, I asked Nathan, who determined using a test:

import sys

while True:

input = sys.stdin.readline().strip()

if input == '':

break

upperCase = input.upper()

print( upperCase )

master ~/dev/python-filter $ echo "Blah!" | python poop.py

BLAH!

master ~/dev/python-filter $

...that checking the return from sys.stdin.readline() for being zero-length tells us to drop out of the read loop. But, this doesn't work in my code! Why?

I reasoned that in my command-line example, the pipe is getting closed. That's not happening in my NiFi code. So, I inserted a statement to close the pipe (see hilghted line below) as soon as finished writing what the input flowfile was offering:

session.read( flowfile, new InputStreamCallback()

{

/**

* There is probably a much speedier way than to read the flowfile line

* by line. Check it out.

*/

@Override

public void process( InputStream in ) throws IOException

{

BufferedReader input = new BufferedReader( new InputStreamReader( in ) );

String line;

while( ( line = input.readLine() ) != null )

{

output.write( line + "\n" );

output.flush();

}

output.close();

}

} );

| StandardValidators | Description |

|---|---|

| ATTRIBUTE_KEY_VALIDATOR | ? |

| ATTRIBUTE_KEY_PROPERTY_NAME_VALIDATOR | ? |

| INTEGER_VALIDATOR | is an integer value |

| POSITIVE_INTEGER_VALIDATOR | is an integer value above 0 |

| NON_NEGATIVE_INTEGER_VALIDATOR | is an integer value above -1 |

| LONG_VALIDATOR | is a long integer value |

| POSITIVE_LONG_VALIDATOR | is a long integer value above 0 |

| PORT_VALIDATOR | is a valid port number |

| NON_EMPTY_VALIDATOR | is not an empty string |

| BOOLEAN_VALIDATOR | is "true" or "false" |

| CHARACTER_SET_VALIDATOR | contains only valid characters supported by JVM |

| URL_VALIDATOR | is a well formed URL |

| URI_VALIDATOR | is a well formed URI |

| REGULAR_EXPRESSION_VALIDATOR | is a regular expression |

| ATTRIBUTE_EXPRESSION_LANGUAGE_VALIDATOR | is a well formed key-value pair |

| TIME_PERIOD_VALIDATOR | is of format duration time unit where duration is a non-negative integer and time unit is a supported type, one of { nanos, millis, secs, mins, hrs, days }. |

| DATA_SIZE_VALIDATOR | is a non-nil data size |

| FILE_EXISTS_VALIDATOR | is a path to a file that exists |

I'm still struggling to run tests after adding in all the properties I wish to support. I get:

'Filter Name' validated against 'filter.py' is invalid because 'Filter Name' is not a supported property

...and I don't understand why. Clearly, there's no testing of NiFi with corresponding failure cases. No one gives an explanation of this error, how to fix it, or what's involved in creating a proper, supported property. It's trial and error. I lucked out with PYTHON_INTERPRETER and FILTER_REPOSITORY.

However, I found that they all began to pass as soon as I constructed them with validators. I had left validation off because a) as inferred above I wonder about validation and b) at least the last four are optional.

Now, the code is running again. However,

final String doctype = flowfile.getAttribute( DOCTYPE ); final String pathname = flowfile.getAttribute( PATHNAME ); final String filename = flowfile.getAttribute( FILENAME ); final String basename = flowfile.getAttribute( BASENAME );

...gives me only FILENAME, because the NiFi TestRunner happens to set that one up. How does it know? The others return null, yet I've tried to set them. What's wrong is the corresondence between runner.setProperty() and the property and flowfile.getAttributes().

Let's isolate the properties handling (only) from the NiFi rocks example.

// JsonProcessor.java:

private List< PropertyDescriptor > properties;

public static final PropertyDescriptor JSON_PATH = new PropertyDescriptor.Builder()

.name( "Json Path" )

.required( true )

.addValidator( StandardValidators.NON_EMPTY_VALIDATOR )

.build();

List< PropertyDescriptor > properties = new ArrayList<>();

properties.add( JSON_PATH );

this.properties = Collections.unmodifiableList( properties );

// JsonProcessorTest.java:

runner.setProperty( JsonProcessor.JSON_PATH, "$.hello" );

Hmmmm... I think there is a complete separation between flowfile attributes and processor properties. I've reworked all of that, and my code (still/now) works though there are unanswered questions.

runner.assertQueueEmpty() will fail (and stop testing) if there are any flowfiles left untransferred in the queue. Did you forget to transfer a flowfile? Did you lose your handle to a flowfile because you used the variable to point at another flowfile before you had transferred it? Etc.

NiFi logging example...

final ProcessorLog logger = getLogger();

String key = "someKey";

String value = "someValue";

logger.warn( "This is a warning message" );

logger.info( "This is a message with some interpolation key={}, value={}", new String[]{ key, value } );

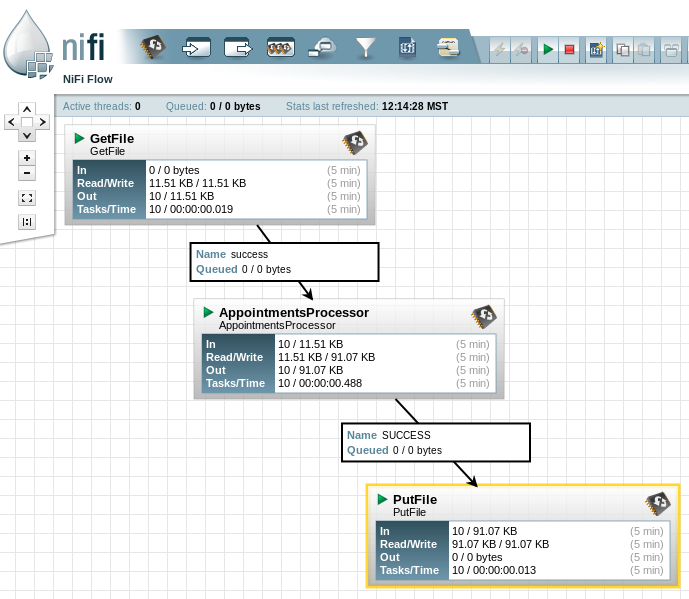

My second NiFi processor at work.

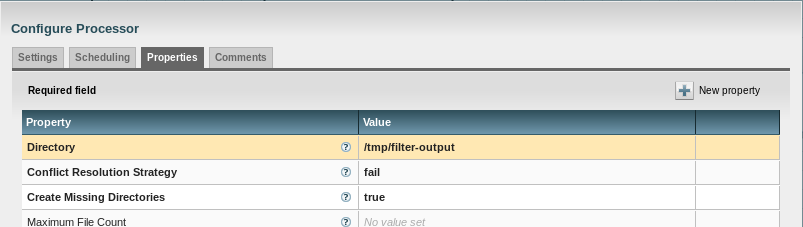

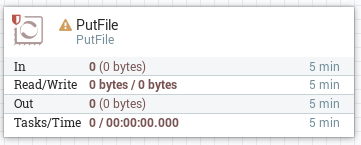

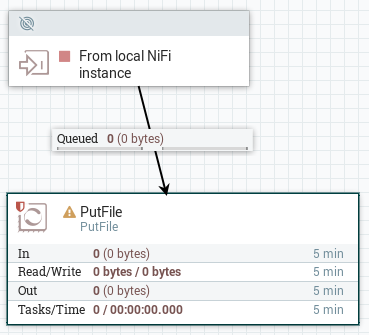

Documenting practical consumption of GetFile and PutFile processors (more or less "built-ins") of NiFi. These are the differences from default properties:

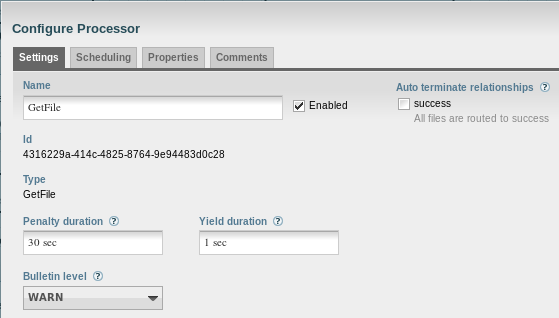

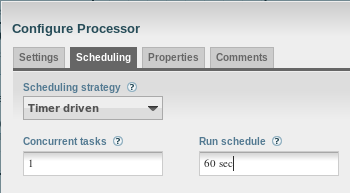

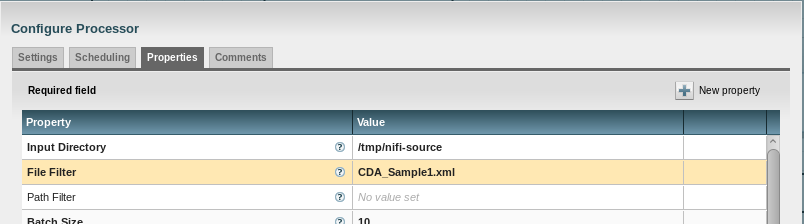

GetFile: Configure Processor

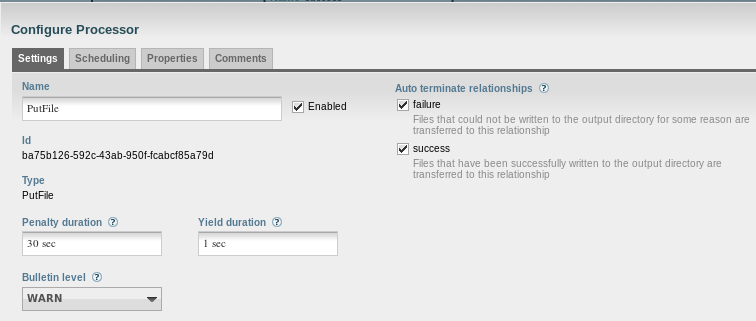

PutFile: Configure Processor

My own AppointmentsProcessor: Configure Processor

The UI sequence appears to be:

Looking to change my port number from 8080, the property is

nifi.web.http.port=8080

...and this is in ${NIFI_HOME}/conf/nifi.properties. Also of interest may be:

nifi.web.http.host nifi.web.https.host nifi.web.https.port

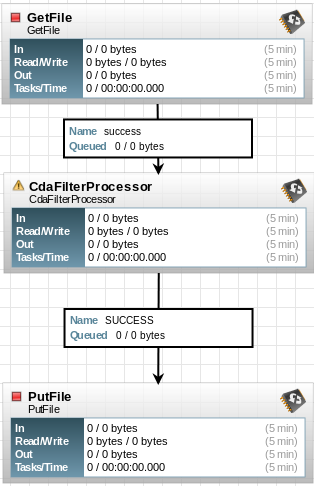

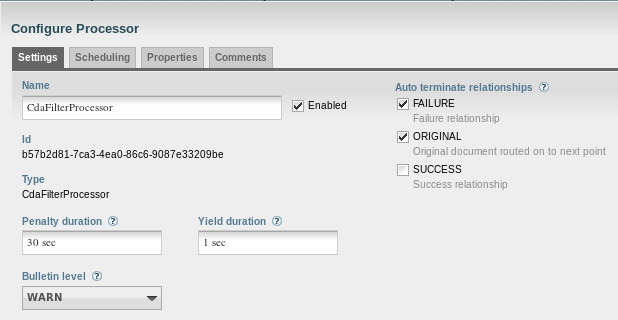

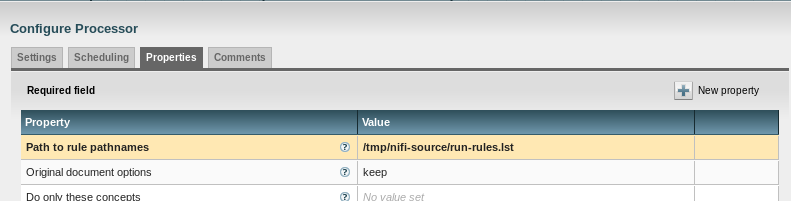

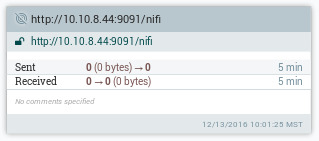

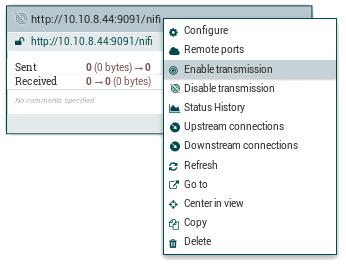

I'm testing actual NiFi set-ups for cda-breaker and cda-filter this morning. The former went just fine, but I'm going to document the setting up of a pipeline with the latter since I see I have not completely documented such a thing before.

I'm going to set up this:

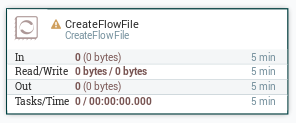

I accomplish this by clicking, dragging and dropping three new processors to the workspace. Here they are and their configurations:

Just the defaults...

The 60 seconds keep the processor from firing over and over again (on the same file) before I can stop the pipeline: I only need it to fire once.

Get CDA_Sample1.xml out of /tmp/nifi-sources for the test.

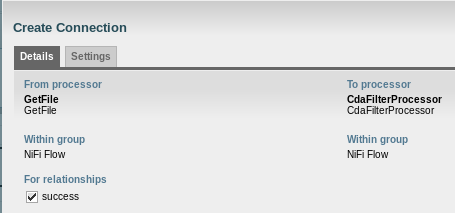

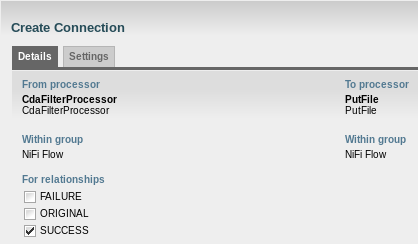

From GetFile, a connection to CdaFilterProcessor must be created for the success relationship (I only pass this file along if it exists):

From CdaFilterProcessor, a connection to PutFile must be created for the original and success relationships:

(Of course, you must ensure that the source file, rules list and rules are in place for the test to have any chance of running.)

Once the three processors and two connections set up, you can turn the processors on in any order, but only once two are on at the same time, with data in the pipeline, will there be processing. Logically, GetFile if the first to light up.

Have left off busy with other stuff. I just found out that the reason additionalDetails.html doesn't work is because one's expected—and this isn't what the JSON example I used to get started does—to produce a JAR of one's processor, then only wrap that in the NAR.

Without that, your trip to right-click the processor, then choosing Usage is unrewarding.

Attacking NiFi attributes today. You get them off a flow file. However, to add an attribute, putAttribute() returns a new or wrapped flow file. The attribute won't be there if you look at the old flow file. In this snippet, we just forget the old flow file and use the new one under the same variable.

FlowFile flowfile = session.get(); String attribute-value = flowfile.getAttribute( "attribute-name" ); flowfile = session.putAttribute( flowfile, attributeName, attributeValue );

Please see Notes on Remote Debugging in Apache Nifi.

An exception that is not handled by the NiFi processor is therefore handled by the framework by doing a session roll-back and administrative yield. The flowfile is put back into the queue whence it was taken before the exception. It will continue to be submitted to the processor though the processor will slow down (because yielding). Once solution is to fix the processor to catch then gate failure down a relationship.

Got "DocGenerator Unable to document: class xyz / NullPointerException" reported in nifi-app.log

This occurs (at least) when your processor's relationships field (and the return from getRelationships()) is null.

2016-04-14 14:23:26,529 WARN [main] o.apache.nifi.documentation.DocGenerator Unable to document: class com.etretatlogiciels.nifi.processor.HeaderFooterIdentifier

java.lang.NullPointerException: null

at org.apache.nifi.documentation.html.HtmlProcessorDocumentationWriter.writeRelationships(HtmlProcessorDocumentationWriter.java:200) ~[nifi-documentation-0.4.1.jar:0.4.1]

at org.apache.nifi.documentation.html.HtmlProcessorDocumentationWriter.writeAdditionalBodyInfo(HtmlProcessorDocumentationWriter.java:47) ~[nifi-documentation-0.4.1.jar:0.4.1]

at org.apache.nifi.documentation.html.HtmlDocumentationWriter.writeBody(HtmlDocumentationWriter.java:129) ~[nifi-documentation-0.4.1.jar:0.4.1]

at org.apache.nifi.documentation.html.HtmlDocumentationWriter.write(HtmlDocumentationWriter.java:67) ~[nifi-documentation-0.4.1.jar:0.4.1]

at org.apache.nifi.documentation.DocGenerator.document(DocGenerator.java:115) ~[nifi-documentation-0.4.1.jar:0.4.1]

at org.apache.nifi.documentation.DocGenerator.generate(DocGenerator.java:76) ~[nifi-documentation-0.4.1.jar:0.4.1]

at org.apache.nifi.NiFi.(NiFi.java:123) [nifi-runtime-0.4.1.jar:0.4.1]

at org.apache.nifi.NiFi.main(NiFi.java:227) [nifi-runtime-0.4.1.jar:0.4.1]

Here's how to document multiple dynamic properties. For only one, just use @DynamicProperty alone.

@SideEffectFree

@Tags( { "SomethingProcessor" } )

@DynamicProperties( {

@DynamicProperty(

name = "section-N.name",

value = "Name",

supportsExpressionLanguage = false,

description = "Add the name to identify a section."

+ " N is a required integer to unify the three properties especially..."

+ " Example: \"section-1.name\" : \"illness\"."

),

@DynamicProperty(

name = "section-N.pattern",

value = "Pattern",

supportsExpressionLanguage = false,

description = "Add the pattern to identify a section."

+ " N is a required integer to unify the three properties especially..."

+ " Example: \"section-1.pattern\" : \"History of Illness\"."

),

@DynamicProperty(

name = "section-N.NLP",

value = "\"on\" or \"off\"",

supportsExpressionLanguage = false,

description = "Add whether to turn NLP on or off."

+ " N is a required integer to unify the three properties especially..."

+ " Example: \"section-1.nlp\" : \"on\"."

)

} )

public class SomethingProcessor extends AbstractProcessor

{

...

Need to set up NiFi to work from home. First, download the tarball containing binaries from Apache NiFi Downloads and explode it.

~/Downloads $ tar -zxf nifi-0.6.1-bin.tar.gz ~/Downloads $ mv nifi-0.6.1 ~/dev ~/Downloads $ cd ~/dev ~/dev $ ln -s ./nifi-0.6.1/ ./nifi

Edit nifi-0.6.1/conf/bootstrap.conf and add my username.

# Username to use when running NiFi. This value will be ignored on Windows. run.as=russ

Launch NiFi.

~/dev/nifi/bin $ ./nifi.sh start

Launch browser.

http://localhost:8080/nifi/

And there we are!

How to inject flow-file attributes:

TestRunner runner = TestRunners.newTestRunner( new VelocityTemplating() ); Map< String, String > flowFileAttributes = new HashMap<>(); flowFileAttributes.put( "name", "Velocity-templating Unit Test" ); // used in merging "Hello $name!" . . . runner.enqueue( DOCUMENT, flowFileAttributes ); . . .

If you install NiFi as a service, then wish to remove it, there are some files (these might not be the exact names) you'll need to remove:

Some useful comments on examing data during flow and debugging NiFi processors.

Andy LoPresto:

NiFi is intentionally designed to allow you to make changes on a very tight cycle while interacting with live data. This separates it from a number of tools that require lengthy deployment processes to push them to sandbox/production environments and test with real data. As one of the engineers says frequently, "NiFi is like digging irrigation ditches as the water flows, rather than building out a sprinkler system in advance."

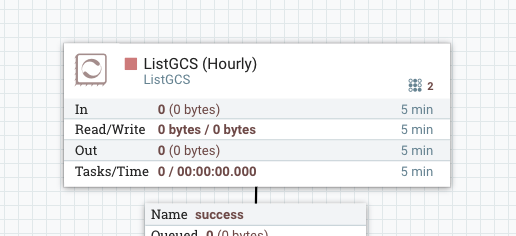

Each processor component and queue will show statistics about the data they are processing and you can see further information and history by going into the Stats dialog of the component (available through the right-click context menu on each element).

To monitor actual data (such as the response of an HTTP request or the result of a JSON split), I'd recommend using the LogAttribute processor. You can use this processor to print the value of specific attributes, all attributes, flowfile payload, expression language queries, etc. to a log file and monitor the content in real-time. I'm not sure if we have a specific tutorial for this process, but many of the tutorials and videos for other flows include this functionality to demonstrate exactly what you are looking for. If you decide that the flow needs modification, you can also replay flowfiles through the new flow very easily from the provenance view.

One other way to explore the processors, is of course to write a unit test and evaluate the result compared to your expected values. This is more advanced and requires downloading the source code and does not use the UI, but for some more complicated usages or for people familiar with the development process, NiFi provides extensive flow mocking capabilities to allow developers to do this.

Matt Burgess:

As of at least 0.6.0, you can view the items in a queued connection. So for your example, you can have a GetHttp into a SplitJson, but don't start the SplitJson, just the GetHttp. You will see any flowfiles generated by GetHttp queued up in the success (or response?) connection (whichever you have wired to SplitJson). Then you can right-click on the connection (the line between the processors) and choose List Queue. In that dialog you can choose an element by clicking on the Info icon ('i' in a circle) and see the information about it, including a View button for the content.

The best part is that you don't have to do a "preview" run, then a "real" run. The data is in the connection's queue, so you can make alterations to your SplitJson, then start it to see if it works. If it doesn't, stop it and start the GetHttp again (if stopped) to put more data in the queue. For fine-grained debugging, you can temporarily set the Run schedule for the SplitJson to something like 10 seconds, then when you start it, it will likely only bring in one flow file, so you can react to how it works, then stop it before it empties the queue.

Joe Percivall:

Provenance is useful for replaying events but I also find it very useful for debugging processors/flows as well. Data Provenance is a core feature of NiFi and it allows you to see exactly what the FlowFile looked like (attributes and content) before and after a processor acted on it as well as the ability to see a map of the journey that FlowFile underwent through your flow. The easiest way to see the provenance of a processor is to right click on it and then click "Data provenance".

Before explaining how to work with templates and process groups, it's necessary to define certain visual elements of the NiFi workspace. I'm just getting around to this because my focus until now has been to write and debug custom processors for others' use.

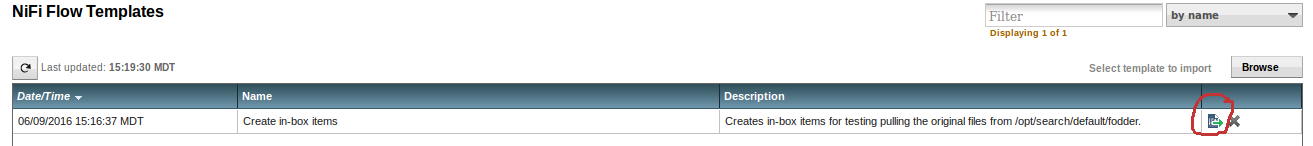

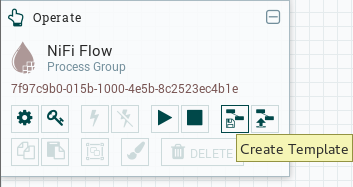

Here's how to make a template in NiFi. Templates are used to reproduce work flows without having to rebuild them, from all their components and configuration, by hand. I don't know how yet to "carry" templates around between NiFi installations.

Go here for the best illustrated guide demonstrating Apache NiFi templates, or, better yet, go here: NiFi templating notes.

Here's a video on creating templates by the way. And here are the steps:

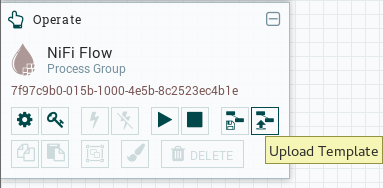

Here's how to consume an existing template in your NiFi workspace:

You can turn a template into a process group in order to push the detail of how it's built down to make your work flow simpler at the top level.

Here's a video on process groups.

Sometimes you already have a flow you want to transform into a process group.

You can zoom in (or out) on the process group. It looks like a processor because it performs the same function as a processor, only it does a lot more since it subsumes multiple processors.

However, a process group must have explicit input- and output ports in order to treat it as a processor:

...and the current installation too. Here's a video on exporting templates.

Note: if your template uses processors or other components that don't exist in the target NiFi installation (that your template is taken to), then it can be imported, but when attempting to use it in that installation, NiFi will issue an error (in a modeless alert).

This uses the Template Management dialog too.

Some NiFi notes here on logging...

Beginning in NiFi 1.0, it can be said that it used to be that NiFi logging would be done by each processor at the INFO level to say what it's doing for each flowfile. However, this became extremely abusive in terms of logging diskspace, so the the minimum level for processors was instead set to WARN. In conf/logback.xml this can be changed back by setting the log level of org.apache.nifi.processors.

Prior to 1.0, however, one saw this:

. . . <logger name="org.apache.nifi" level="INFO" /> . . .

Today I'm studying the NiFi ReST (NiFi API) which provides programmatic access to command and control of a NiFi instance (in real time). Specifically, I'm interested in monitoring, indeed, in obtaining an answer to questions like:

Also, I'm interested in:

But first, let's break this ReST control down by service. I'm not interested in all of these today, but since I've got the information in a list, here it is:

| Service | Function(s) |

|---|---|

| Controller | Get controller configuration, search the flow, manage templates, system diagnostics |

| Process Groups | Get the flow, instantiate a template, manage sub groups, monitor component status |

| Processors | Create a processor, set properties, schedule |

| Connections | Create a connection, set queue priority, update connection destination |

| Input Ports | Create an input port, set remote port access control |

| Output Ports | Create an output port, set remote port access control |

| Remote Process Groups | Create a remote group, enable transmission |

| Labels | Create a label, set label style |

| Funnels | Manage funnels |

| Controller Services | Manage controller services, update controller service references |

| Reporting Tasks | Manage reporting tasks |

| Cluster | View node status, disconnect nodes, aggregate component status |

| Provenance | Query provenance, search event lineage, download content, replay |

| History | View flow history, purge flow history |

| Users | Update user access, revoke accounts, get account details, group users |

Let me make a quick list of end-points that pique my interest and I'll try them out, make further notes, etc.

| Service | Verb | URI | Notes |

|---|---|---|---|

| Controller | GET | /controller/config | retrieve configuration |

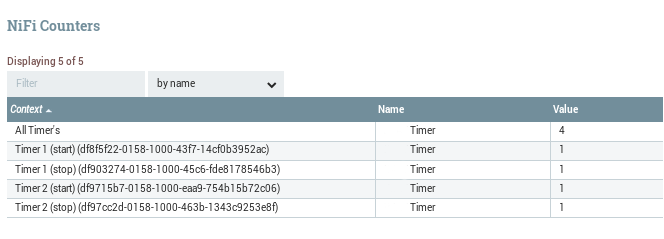

| GET | /controller/counter | current counters |

Here's dynamic access to all of the service documentation: https://nifi.apache.org/docs/nifi-docs/rest-api/

If I'm accessing my NiFi canvas (flow) using http://localhost:8080/nifi, I use this URI to reach the ReST end-points:

http://localhost:8080/nifi-api/ service / end-point

For example, http://localhost:8080/nifi-api/controller/config returns (I'm running this against a NiFI flow that's got two flows of 3 and 2 processors with relationships between them that have sat for a couple of weeks unused) this:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<controllerConfigurationEntity>

<revision>

<clientId>87718998-e57b-43ac-9e65-15f2b8e0feaa</clientId>

</revision>

<config>

<autoRefreshIntervalSeconds>30</autoRefreshIntervalSeconds>

<comments></comments>

<contentViewerUrl>/nifi-content-viewer/</contentViewerUrl>

<currentTime>13:23:01 MDT</currentTime>

<maxEventDrivenThreadCount>5</maxEventDrivenThreadCount>

<maxTimerDrivenThreadCount>10</maxTimerDrivenThreadCount>

<name>NiFi Flow</name>

<siteToSiteSecure>true</siteToSiteSecure>

<timeOffset>-21600000</timeOffset>

<uri>http://localhost:8080/nifi-api/controller</uri>

</config>

</controllerConfigurationEntity>

I'm using the Firefox </> RESTED client. What about JSON? Yes, RESTED allows me to add Accept: application/json:

{

"revision":

{

"clientId": "f841561c-60c6-4d9b-a3ce-d2202789eef7"

},

"config":

{

"name": "NiFi Flow",

"comments": "",

"maxTimerDrivenThreadCount": 10,

"maxEventDrivenThreadCount": 5,

"autoRefreshIntervalSeconds": 30,

"siteToSiteSecure": true,

"currentTime": "14:06:18 MDT",

"timeOffset": -21600000,

"contentViewerUrl": "/nifi-content-viewer/",

"uri": "http://localhost:8080/nifi-api/controller"

}

}

I wanted to see the available processors in a list. I did this via: http://localhost:8080/nifi-api/controller/processor-types

However, I could not figure out what to supply as {processorId} to

controller/history/processors/{processorId}. I decided to use a processor

name:

http://localhost:8080/nifi-api/controller/history/processors/VelocityTemplating

This did the trick:

{

"revision":

{

"clientId": "86082d22-9fb2-4aea-86bc-efe062861d51"

},

"componentHistory":

{

"componentId": "VelocityTemplating",

"propertyHistory": {}

}

}

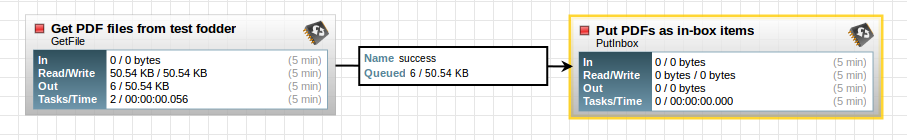

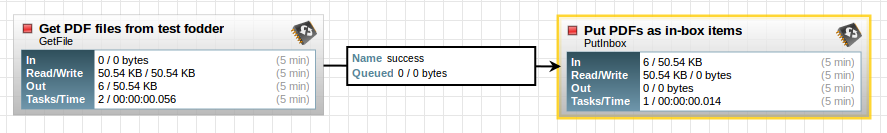

I notice that I can't see the name I give the processor. For example, I have a GetFile that I named Get PDF files from test fodder. I can't find any API that returns that.

Now, about instances of processors. The forum told me that, even if I'm not using any process groups, there is one process group for what I am using, namely root; this URI is highlighted below:

http://localhost:8080/nifi-api/controller/process-groups/root/status

{

"revision":

{

"clientId": "98d70ac0-476c-4c6b-bcbc-3ec91193d994"

},

"processGroupStatus":

{

"bulletins": [],

"id": "50e3886b-984d-4e90-a889-d46dc53c4afc",

"name": "NiFi Flow",

"connectionStatus":

[

{

"id": "f3a032d6-6bd1-4cc5-ab5e-8923e131c222",

"groupId": "50e3886b-984d-4e90-a889-d46dc53c4afc",

"name": "success",

"input": "0 / 0 bytes",

"queuedCount": "0",

"queuedSize": "0 bytes",

"queued": "0 / 0 bytes",

"output": "0 / 0 bytes",

"sourceId": "9929acf6-aac7-46d9-a0dd-f239d66e1594",

"sourceName": "Get PDFs from inbox",

"destinationId": "c396af51-2221-4bfd-ba31-5f4b5913b910",

"destinationName": "Generate XML from advance directives template"

},

{

"id": "e5ba7858-8301-4fd0-885e-6fb63b336e53",

"groupId": "50e3886b-984d-4e90-a889-d46dc53c4afc",

"name": "success",

"input": "0 / 0 bytes",

"queuedCount": "0",

"queuedSize": "0 bytes",

"queued": "0 / 0 bytes",

"output": "0 / 0 bytes",

"sourceId": "c396af51-2221-4bfd-ba31-5f4b5913b910",

"sourceName": "Generate XML from advance directives template",

"destinationId": "1af4a618-ca9b-4697-81b5-3ba1bbfaba6b",

"destinationName": "Put generated XMLs to output folder"

},

{

"id": "53e4a0e9-ce20-4e4d-84f3-d12504edeccc",

"groupId": "50e3886b-984d-4e90-a889-d46dc53c4afc",

"name": "success",

"input": "0 / 0 bytes",

"queuedCount": "3",

"queuedSize": "25.27 KB",

"queued": "3 / 25.27 KB",

"output": "0 / 0 bytes",

"sourceId": "4435c701-0719-4231-8867-671db08dd813",

"sourceName": "Get PDF files from test fodder",

"destinationId": "5f28baeb-b24c-4c3f-b6d0-c3284553d6ac",

"destinationName": "Put PDFs as in-box items"

}

],

"processorStatus":

[

{

"id": "c396af51-2221-4bfd-ba31-5f4b5913b910",

"groupId": "50e3886b-984d-4e90-a889-d46dc53c4afc",

"name": "Generate XML from advance directives template",

"type": "VelocityTemplating",

"runStatus": "Stopped",

"read": "0 bytes",

"written": "0 bytes",

"input": "0 / 0 bytes",

"output": "0 / 0 bytes",

"tasks": "0",

"tasksDuration": "00:00:00.000",

"activeThreadCount": 0

},

{

"id": "9929acf6-aac7-46d9-a0dd-f239d66e1594",

"groupId": "50e3886b-984d-4e90-a889-d46dc53c4afc",

"name": "Get PDFs from inbox",

"type": "GetInbox",

"runStatus": "Stopped",

"read": "0 bytes",

"written": "0 bytes",

"input": "0 / 0 bytes",

"output": "0 / 0 bytes",

"tasks": "0",

"tasksDuration": "00:00:00.000",

"activeThreadCount": 0

},

{

"id": "5f28baeb-b24c-4c3f-b6d0-c3284553d6ac",

"groupId": "50e3886b-984d-4e90-a889-d46dc53c4afc",

"name": "Put PDFs as in-box items",

"type": "PutInbox",

"runStatus": "Stopped",

"read": "0 bytes",

"written": "0 bytes",

"input": "0 / 0 bytes",

"output": "0 / 0 bytes",

"tasks": "0",

"tasksDuration": "00:00:00.000",

"activeThreadCount": 0

},

{

"id": "1af4a618-ca9b-4697-81b5-3ba1bbfaba6b",

"groupId": "50e3886b-984d-4e90-a889-d46dc53c4afc",

"name": "Put generated XMLs to output folder",

"type": "PutFile",

"runStatus": "Stopped",

"read": "0 bytes",

"written": "0 bytes",

"input": "0 / 0 bytes",

"output": "0 / 0 bytes",

"tasks": "0",

"tasksDuration": "00:00:00.000",

"activeThreadCount": 0

},

{

"id": "4435c701-0719-4231-8867-671db08dd813",

"groupId": "50e3886b-984d-4e90-a889-d46dc53c4afc",

"name": "Get PDF files from test fodder",

"type": "GetFile",

"runStatus": "Stopped",

"read": "0 bytes",

"written": "0 bytes",

"input": "0 / 0 bytes",

"output": "0 / 0 bytes",

"tasks": "0",

"tasksDuration": "00:00:00.000",

"activeThreadCount": 0

}

],

"processGroupStatus": [],

"remoteProcessGroupStatus": [],

"inputPortStatus": [],

"outputPortStatus": [],

"input": "0 / 0 bytes",

"queuedCount": "3",

"queuedSize": "25.27 KB",

"queued": "3 / 25.27 KB",

"read": "0 bytes",

"written": "0 bytes",

"output": "0 / 0 bytes",

"transferred": "0 / 0 bytes",

"received": "0 / 0 bytes",

"sent": "0 / 0 bytes",

"activeThreadCount": 0,

"statsLastRefreshed": "09:53:12 MDT"

}

}

This sort of hits the jackpot. What we see above are:

So, let's see if we can't dig into this stuff above using Python.

My Python's not as good as it was a year ago when I last coded in this language, but Python is extraordinarily useful, as compared to anything else (yeah, okay, I'm not too keen on Ruby as compared to Python and I'm certainly down on Perl) for writing these scripts. To write this in Java, you'd end up with fine software, but it is more work (to juggle the JSON-to-POJO transformation) and of course, a JAR isn't as brainlessly usable as your command-line is mucker than in a proper scripting language.

This illustrates a cautious traversal down to processor names (and types) which shows the way because there are countless additional tidbits to show like process group-wide status. Remember, this is a weeks-old NiFi installation that's just been sitting there. I've made no attempt to shake it around so that the status numbers get more interesting. Here's my first script:

import sys

import json

import globals

import httpclient

def main( argv ):

if( len( argv ) > 1 and argv[ 1 ] == '-d' or argv[ 1 ] == '--debug' ):

DEBUG = True

URI = globals.NIFI_API_URI( "controller" ) + 'process-groups/root/status'

print( 'URI = %s' % URI )

response = httpclient.get( URI )

jsonDict = json.loads( response )

print

print 'Keys back from request:'

print list( jsonDict.keys() )

processGroupStatus = jsonDict[ 'processGroupStatus' ]

print

print 'processGroupStatus keys:'

print list( processGroupStatus.keys() )

connectionStatus = processGroupStatus[ 'connectionStatus' ]

print

print 'connectionStatus keys:'

print connectionStatus

processorStatus = processGroupStatus[ 'processorStatus' ]

print

print 'processorStatus:'

print ' There are %d processors listed.' % len( processorStatus )

for processor in processorStatus:

print( ' (%s): %s' % ( processor[ 'type' ], processor[ 'name' ] ) )

if __name__ == "__main__":

if len( sys.argv ) <= 1:

args = [ 'first.py' ]

main( args )

sys.exit( 0 )

elif len( sys.argv ) >= 1:

sys.exit( main( sys.argv ) )

Output:

URI = http://localhost:8080/nifi-api/controller/process-groups/root/status

Keys back from request:

[u'processGroupStatus', u'revision']

processGroupStatus keys:

[u'outputPortStatus', u'received', u'queuedCount', u'bulletins', u'activeThreadCount', u'read', u'queuedSize', ...]

connectionStatus keys:

[{u'queuedCount': u'0', u'name': u'success', u'sourceId': u'9929acf6-aac7-46d9-a0dd-f239d66e1594', u'queuedSize': u'0 bytes' ...]

processorStatus:

There are 5 processors listed.

(VelocityTemplating): Generate XML from advance directives template

(GetInbox): Get PDFs from inbox

(PutInbox): Put PDFs as in-box items

(PutFile): Put generated XMLs to output folder

(GetFile): Get PDF files from test fodder

I'm convinced that Reporting Tasks, which are written very similarly to Processors, but not deployed like them (so, using a reporting task isn't so much a matter of tediously clicking and dragging boxes, but of enabling task reporting including custom task reporting for the whole flow or process group) are the best approach to monitoring. See also Re: Workflow monitoring/notifications.

(The second of the three NiFi architectural components is the Controller Service.)

The NiFi ReST end-points are very easy to use, but there is some difficulty in their semantics which appear a bit random still to my mind. The NiFi canvas or web UI is built atop these end-points (of course) and It is possible to follow what's happening using the Developer tools in your browser (Firefox: Developer Tools → Network, then click desired method to see URI, etc.). I found that a little challenging because I've been pretty much a back-end guy who's never used the browser developer intensively.

I found it very easy, despite not being a rockstar Python guy, to write simple scripts to hit NiFi. And I did. However, realize that the asynchrony of this approach presents a challenge given that a work flow can be very dynamic. Examining something in a NiFi flow from Python is like trying to inspect a flight to New York to see if your girlfriend is sitting next to your rival as it passes over Colorado, Nebraska, Iowa, etc. It's just not the best way. The ids of individual things you're looking for are not always easily ascertained and can change as the plane transits from Nebraska to Iowa to Illinois. Enabling task report or even writing a custom reporting task and enabling that strikes me as the better solution though it forces us to design or conceive of what we want to know up front. This isn't necessarily bad in the long-run.

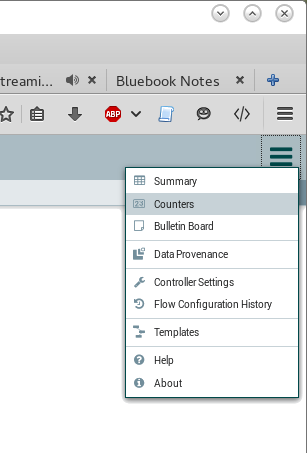

Gain access to reporting tasks by clicking the Controller Settings in the Management Toolbar.

Here's a coded example, ControllerStatusReportingTask. The important methods to implement appear to be:

The onTrigger( ReportingContext context ) method is given access to reporting context which carries the following (need to investigate how this is helpful):

Yes, it just already exists, right? So use it? ControllerStatusReportingTask, that yields the following, which may be sufficient to most of our needs.

Information logged:

By default, the output from this reporting task goes to the NiFi log, but can be redirected elsewhere by modifying conf/logback.xml. (This would constitute a new logger, something like?)

<appender name="STATUS_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>logs/nifi-status.log</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>./logs/nifi-status_%d.log</fileNamePattern>

<maxHistory>30</maxHistory>

</rollingPolicy>

<encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">

<pattern>%date %level [%thread] %logger{40} %msg%n</pattern>

</encoder>

</appender>

.

.

.

<logger name="org.apache.nifi.controller.ControllerStatusReportingTask" level="INFO" additivity="false">

<appender-ref ref="STATUS_FILE" />

</logger>

So, I think what we want is already rolled for us by NiFi and all we need do is:

Today, I followed these steps to reporting task happiness—as an experiment. I removed all log files in order to minimize the confusion and see differences in less space to look through.

fgrep reporting *.log yields:

nifi-app.log: org.apache.nifi.reporting.ganglia.StandardGangliaReporter || org.apache.nifi.nar.NarClassLoader[./work/nar/extensions/nifi-standard-nar-0.6.1.nar-unpacked] nifi-app.log: org.apache.nifi.reporting.ambari.AmbariReportingTask || org.apache.nifi.nar.NarClassLoader[./work/nar/extensions/nifi-ambari-nar-0.6.1.nar-unpacked] nifi-user.log:2016-07-14 09:49:09,509 INFO [NiFi Web Server-25] org.apache.nifi.web.filter.RequestLogger Attempting request for (anonymous) GET http://localhost:8080/nifi-api/controller/reporting-task-types (source ip: 127.0.0.1) nifi-user.log:2016-07-14 09:49:09,750 INFO [NiFi Web Server-70] org.apache.nifi.web.filter.RequestLogger Attempting request (ibid) nifi-user.log:2016-07-14 09:49:26,596 INFO [NiFi Web Server-24] org.apache.nifi.web.filter.RequestLogger Attempting request (ibid)

Even after waiting minutes, I see no difference when running the grep. However, I launch vim on nifi-app.log and see, here and there, stuff like:

2016-07-14 09:48:50,481 INFO [Timer-Driven Process Thread-1] c.a.n.c.C.Processors Processor Statuses: ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ | Processor Name | Processor ID | Processor Type | Run Status | Flow Files In | Flow Files Out | Bytes Read | Bytes Written | Tasks | Proc Time | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ | Generate XML from advance dire | c396af51-2221-4bfd-ba31-5f4b5913b910 | VelocityTemplating | Stopped | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 bytes (+0 bytes) | 0 bytes (+0 bytes) | 0 (+0) | 00:00:00.000 (+00:00:00.000) | | Get PDFs from inbox | 9929acf6-aac7-46d9-a0dd-f239d66e1594 | GetInbox | Stopped | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 bytes (+0 bytes) | 0 bytes (+0 bytes) | 0 (+0) | 00:00:00.000 (+00:00:00.000) | | Put PDFs as in-box items | 5f28baeb-b24c-4c3f-b6d0-c3284553d6ac | PutInbox | Stopped | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 bytes (+0 bytes) | 0 bytes (+0 bytes) | 0 (+0) | 00:00:00.000 (+00:00:00.000) | | Put generated XMLs to output f | 1af4a618-ca9b-4697-81b5-3ba1bbfaba6b | PutFile | Stopped | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 bytes (+0 bytes) | 0 bytes (+0 bytes) | 0 (+0) | 00:00:00.000 (+00:00:00.000) | | Get PDF files from test fodder | 4435c701-0719-4231-8867-671db08dd813 | GetFile | Stopped | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 bytes (+0 bytes) | 0 bytes (+0 bytes) | 0 (+0) | 00:00:00.000 (+00:00:00.000) | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ 2016-07-14 09:48:50,483 INFO [Timer-Driven Process Thread-1] c.a.n.c.C.Connections Connection Statuses: --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | Connection ID | Source | Connection Name | Destination | Flow Files In | Flow Files Out | FlowFiles Queued | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | 53e4a0e9-ce20-4e4d-84f3-d12504edeccc | Get PDF files from test fodder | success | Put PDFs as in-box items | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 9 / 75.82 KB (+9/+75.82 KB) | | f3a032d6-6bd1-4cc5-ab5e-8923e131c222 | Get PDFs from inbox | success | Generate XML from advance dire | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | | e5ba7858-8301-4fd0-885e-6fb63b336e53 | Generate XML from advance dire | success | Put generated XMLs to output f | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

(I would figure out a little later that this is the start-up view before anthing's happened.)

<!-- Logger to redirect reporting task output to a different file. --> <logger name="org.apache.nifi.controller.ControllerStatusReportingTask" level="INFO" additivity="false"> <appender-ref ref="STATUS_FILE" /> </logger>

<!-- rolling appender for controller-status reporting task log. -->

<appender name="STATUS_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>logs/nifi-status.log</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>./logs/nifi-status_%d.log</fileNamePattern>

<maxHistory>30</maxHistory>

</rollingPolicy>

<encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">

<pattern>%date %level [%thread] %logger{40} %msg%n</pattern>

</encoder>

</appender>

~/dev/nifi/logs $ ll

total 76

drwxr-xr-x 2 russ russ 4096 Jul 14 10:28 .

drwxr-xr-x 13 russ russ 4096 Jul 14 09:37 ..

-rw-r--r-- 1 russ russ 65016 Jul 14 10:29 nifi-app.log

-rw-r--r-- 1 russ russ 3069 Jul 14 10:28 nifi-bootstrap.log

-rw-r--r-- 1 russ russ 0 Jul 14 10:28 nifi-status.log

-rw-r--r-- 1 russ russ 0 Jul 14 10:28 nifi-user.log

2016-07-14 10:34:06,643 INFO [Timer-Driven Process Thread-1] c.a.n.c.C.Processors Processor Statuses: ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | Processor Name | Processor ID | Processor Type | Run Status | Flow Files In | Flow Files Out | Bytes Read | Bytes Written | Tasks | Proc Time | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | Get PDF files from test fodder | 4435c701-0719-4231-8867-671db08dd813 | GetFile | Stopped | 0 / 0 bytes (+0/+0 bytes) | 6 / 50.54 KB (+6/+50.54 KB) | 50.54 KB (+50.54 KB) | 50.54 KB (+50.54 KB) | 2 (+2) | 00:00:00.056 (+00:00:00.056) | | Put PDFs as in-box items | 5f28baeb-b24c-4c3f-b6d0-c3284553d6ac | PutInbox | Stopped | 6 / 50.54 KB (+6/+50.54 KB) | 0 / 0 bytes (+0/+0 bytes) | 50.54 KB (+50.54 KB) | 0 bytes (+0 bytes) | 1 (+1) | 00:00:00.014 (+00:00:00.014) | | Generate XML from advance dire | c396af51-2221-4bfd-ba31-5f4b5913b910 | VelocityTemplating | Stopped | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 bytes (+0 bytes) | 0 bytes (+0 bytes) | 0 (+0) | 00:00:00.000 (+00:00:00.000) | | Get PDFs from inbox | 9929acf6-aac7-46d9-a0dd-f239d66e1594 | GetInbox | Stopped | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 bytes (+0 bytes) | 0 bytes (+0 bytes) | 0 (+0) | 00:00:00.000 (+00:00:00.000) | | Put generated XMLs to output f | 1af4a618-ca9b-4697-81b5-3ba1bbfaba6b | PutFile | Stopped | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 bytes (+0 bytes) | 0 bytes (+0 bytes) | 0 (+0) | 00:00:00.000 (+00:00:00.000) | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 2016-07-14 10:34:06,644 INFO [Timer-Driven Process Thread-1] c.a.n.c.C.Connections Connection Statuses: -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | Connection ID | Source | Connection Name | Destination | Flow Files In | Flow Files Out | FlowFiles Queued | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | f3a032d6-6bd1-4cc5-ab5e-8923e131c222 | Get PDFs from inbox | success | Generate XML from advance dire | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | | e5ba7858-8301-4fd0-885e-6fb63b336e53 | Generate XML from advance dire | success | Put generated XMLs to output f | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | 0 / 0 bytes (+0/+0 bytes) | | 53e4a0e9-ce20-4e4d-84f3-d12504edeccc | Get PDF files from test fodder | success | Put PDFs as in-box items | 6 / 50.54 KB (+6/+50.54 KB) | 6 / 50.54 KB (+6/+50.54 KB) | 0 / 0 bytes (+0/+0 bytes) | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

What failed is getting the reported status into nifi-status.log. What succeeded is, just as was working before, getting controller status.

I got a response out of [email protected] that this was a reported bug and it's been

fixed in 0.7.0 and 1.0.0. Also note that %logging{40} is some log-back incantation to

affect the package name such that a name like

org.nifi.controller.ControllerStatusReportingTask becomes

o.n.c.ControllerStatusReportingTask. (See "Pattern Layouts" at

Chapter 6: Layouts.)

I upgraded my NiFi version to 0.7.0 at home and at the office.

I need to take time out to grok this.

${filename:toUpper()}

public static final PropertyDescriptor ADD_ATTRIBUTE = new PropertyDescriptor

.Builder()

.name( "Add Attribute" )

.description( "Example Property" )

.required( true )

.addValidator( StandardValidators.NON_EMPTY_VALIDATOR )

.expressionLanguageSupported( true )

.build();

Map< String, String > values = new HashMap<>(); FlowFile flowFile = session.get(); PropertyDescriptor property = context.getProperty( ADD_ATTRIBUTE ); values.put( "hostname", property.evaluateAttributeExpressions( flowFile ).getValue() );

values.put( "hostname", flowFile.getAttribute( property.getValue() ) );

Hmmmm... yeah, there's precious little written on this stuff that really corrals it. So, I wrote this (albeit non-functional) example using the information above plus what I already know about flowfiles, properties and attributes:

package com.etretatlogiciels;

import java.util.HashMap;

import java.util.Map;

import org.apache.nifi.components.PropertyDescriptor;

import org.apache.nifi.components.PropertyValue;

import org.apache.nifi.components.Validator;

import org.apache.nifi.flowfile.FlowFile;

import org.apache.nifi.processor.ProcessContext;

import org.apache.nifi.processor.ProcessSession;

import org.apache.nifi.processor.util.StandardValidators;

public class FunWithNiFiProperties

{

private static final PropertyDescriptor EL_HOSTNAME = new PropertyDescriptor

.Builder()

.name( "Hostname (supporting EL)" )

.description( "Hostname property that supports NiFi Expression Language syntax"

+ "This is the name of the flowfile attribute to expect to hold the hostname" )

.required( false )

.addValidator( StandardValidators.NON_EMPTY_VALIDATOR )

.expressionLanguageSupported( true ) // !

.build();

private static final PropertyDescriptor HOSTNAME = new PropertyDescriptor

.Builder()

.name( "Hostname" )

.description( "Hostname property that doesn't support NiFi Expression Language syntax."

+ "This is the name of the flowfile attribute to expect to hold the hostname" )

.required( false )

.addValidator( Validator.VALID )

.build();

// values will end up with a map of relevant data, names and values...

private Map< String, String > values = new HashMap<>();

/**

* Example of getting a hold of a property value via what the property value evaluates to

* including what not evaluating it as an expression yields.

*/

private void expressionLanguageProperty( final ProcessContext context, final ProcessSession session )

{

FlowFile flowFile = session.get();

PropertyValue property = context.getProperty( EL_HOSTNAME );

String value = property.getValue(); // (may contain expression-language syntax)

// what's our property and its value?

values.put( EL_HOSTNAME.getName(), value );

// is there a flowfile attribute of this precise name (without evaluating)?

// probably won't be a valid flowfile attribute if contains expression-language syntax...

values.put( "hostname", flowFile.getAttribute( value ) );

// -- or --

// get NiFi to evaluate the possible expression-language of the property as a flowfile attribute name...

values.put( "el-hostname", property.evaluateAttributeExpressions( flowFile ).getValue() );

}

/**

* Example of getting a hold of a property value the normal way, that is, not involving

* any expression syntax.

*/

private void normalProperty( final ProcessContext context, final ProcessSession session )

{

FlowFile flowFile = session.get();

PropertyValue property = context.getProperty( HOSTNAME );

String value = property.getValue(); // (what's in HOSTNAME)

// what's our property and its value?

values.put( HOSTNAME.getName(), value );

// is there a flowfile attribute by this precise name?

values.put( "hostname-attribute-on-flowfile", flowFile.getAttribute( value ) );

}

}

This is all I think I know so far:

Yesterday, I finally put together a tiny example of a full, custom NiFi project minus additionalDetails.html—for that, look in the notes on the present page). See A simple NiFi processor project.

Here's one for your gee-whiz collection: if you do

public void onTrigger( final ProcessContext context, final ProcessSession session ) throws ProcessException

{

FlowFile flowfile = session.get();

.

.

.

someMethod( session );

...NiFi has removed the flowfile from the session's processorQueue and a subsequent call:

void someMethod( final ProcessSession session )

{

FlowFile flowfile = session.get();

...will produce a null flowfile. This is an interesting side-effect.

In what situation would onTrigger() get called if there is no flowfile?

This can happen for a few reasons. Because the processor has...