Russell Bateman

January 2015

last update:

Every development paradigm of any value provides one or more test frameworks capable of being used to develop a product via TDD. I have used Java and Python in this way.

Why test?

So, ...

Test first, code second!

TDD isn't about testing; it's about designing software so that it is testable and understandable. With TDD, much less time is spent debugging code because tests find problems in the code before they can become defects. Less time is spent reading and interpreting requirements because the test code builds concrete examples that, upon inspection, either agree with the product definition or do not. This is more straightforward than trying to write production code from abstract requirements. Later, when you are forced to refactor or enhance production code, tests give confidence that the result still works correctly. There is no way to overestimate the value of that confidence.

Before being able to write tests for features, as described in this page, one must necessarily have knowledge of those features and also their expectations. This implies an even earlier phenomenon of "Documentation-driven Design."

From the perspective of an end-user, if a feature is not documented, then it does not exist. From the developer's perspective, if a feature is not documented, then how does he or she know to make it the object of a test case, then drive development from the success or failure of that test case? Furthermore, if a feature is documented incorrectly, confusingly, inadequately or incompletely, then it is broken: the documentation is broken, the test case is broken and development is broken.

Documentation in product design is therefore not an afterthought or something to "get around to." It must be done up-front, in an organized, orderly and accurate way. A few notes on a napkin, in a slide deck or in an Excel spreadsheet do not constitute adequate and appropriate product design, let alone documentation, let alone the basis of test cases and, especially, the object of any development.

Documentation should be reviewed and, once written, continually polished alongside the testing and development process. Ideally, if functionality becomes misaligned with documentation, tests should fail. But this would be in a perfect world, in a shop big enough to have some people focused on documentation, some on writing and maintaining tests and some on development, though the latter two should be very closely tied together when not done by the same people.

At the very least, genuine software developers have the professional obligation, and owe it to their employer, to back all their code with tests that prove the product, safeguard it against degradation, demonstrate its functionality and serve as documentation to new developers coming on board who don't know it.

In short, when a feature is changed, test cases should be changed or added, while others should be retired if they represent functionality that is no longer valid.

But, on with our subject: Test-driven Development (TDD)!

It's best for the developer to concentrate on the problem space rather than the solution space.

TDD is proactive.

Working without TDD is reactive.

By writing tests first, you write code that is more maintainable than if you just wrote the code to solve the problem. Writing a production class that can also be tested forces you to think in other ways than merely solving the problem. Barring certain mistakes (see further down), the result will be better code.

By testing first, you are forced to develop something akin to a specification. The specification of what the code does is made clear by the test written to ensure it does it.

If I can't write a test for the solution or functionality I'm working on, then I surely do not understand what I'm about to do. If I stab around prototyping the solution without writing one or more tests for it, how do I know what it was really supposed to do or that it really does it?

You know how documentation (specifications) never seems to reflect what the product is doing or how it works because changes never seem to get reflected in documents?

Nailed.

Your test(s) will often begin to fail if you change the code to do something new or different.

I can't count the times my Mockito tests have saved my bacon at the end of a grand refactoring by showing me immediately how I broke something. I'm less afraid to make needed changes to code that's completely covered by tests.

Robert C. Martin said:

Software is a remarkably sensitive discipline. If you reach into a base of code and you change one bit, you can crash the software. Go into the memory and twiddle one bit at random, and very likely, you will elicit some form of crash. Very, very few systems are that sensitive. You could go out to [a bridge], start taking bolts out, and [it] probably wouldn't fall. [...]

Bridges are resilient—they survive the loss of components. But software isn't resilient at all. One bit changes and—BANG!—it crashes. Very few disciplines are that sensitive.

But there is one other [discipline] that is, and that's accounting. The right mistake at exactly the right time on the right spreadsheet—that one-digit error can crash the company and send the offenders off to jail. How do accountants deal with that sensitivity? Well, they have disciplines. One of the primary disciplines is dual-entry bookkeeping. Everything is said twice. Every transaction is entered two times—once on the credit side and once on the debit side. Those two transactions follow separate mathematical pathways until they end up at this wonderful subtraction on the balance sheet that has to yield [...] zero.

This is what test-driven development is: dual-entry bookkeeping. Everything is said twice—once on the test side and once on the production code side and everything runs in an execution that yields either a green bar or a red bar just like the zero on the balance sheet. It seems like that's a good practice for us: to [acknowledge and] manage these sensitivities of our discipline.

Some best practices. An HP colleague posited this a long time ago. I'm not sure I agree in every respect. Mockito has come along since. Etc. Expect a lot of revision here.

A TDD approach lends itself to coding using SOLID principles.

A method or class should be responsible for one task, and one task only. This leads to smaller methods which are easier to understand, have fewer lines of code and fewer bugs and side effects.

A good unit test will test one method or one unit of work. In order for this to work well the method must do one thing well or have a single responsibility.
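As a minimal sketch of what this looks like in practice, consider the class below. PriceCalculator and its method names are hypothetical, invented only for illustration. Each method does one thing, so each can be pinned down by one small, focused test.

```java
// Hypothetical example: each method has a single responsibility,
// so each can be covered by one narrowly focused test.
class PriceCalculator
{
    // Responsibility 1: sum the item prices (in cents).
    int subtotal( int[] pricesInCents )
    {
        int sum = 0;
        for( int price : pricesInCents )
            sum += price;
        return sum;
    }

    // Responsibility 2: apply a whole-number percentage discount to an amount.
    int applyDiscount( int amountInCents, int percent )
    {
        return amountInCents - ( amountInCents * percent / 100 );
    }
}
```

Had both steps been folded into one method, a failing test could not tell us whether the summing or the discounting was at fault.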

The Open/Closed principle, at the method level, suggests that the method you are testing should be open to bug fixes but closed to modification. If your method is doing more than it should to fulfill its responsibilities then there is a greater chance of higher coupling and side effects.

The idea behind the Liskov Substitution principle is that it should be possible to substitute different subclasses of a class without a consumer of the base class needing to know about the substitution.

When a TDD approach is taken, artifacts such as queues and databases should have test doubles to shorten the code-test-fix cycle, which means subclasses are more likely to follow the LSP.
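Here is a rough sketch of such a test double, assuming a hypothetical MessageQueue abstraction (none of these names come from the original article). The in-memory implementation can be substituted for a broker-backed one without the consumer, OrderPublisher, knowing the difference.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical abstraction over a real message queue.
interface MessageQueue
{
    void send( String message );
    String receive();   // returns null when the queue is empty
}

// Test double: keeps messages in memory instead of talking to a broker.
class InMemoryQueue implements MessageQueue
{
    private final Deque< String > messages = new ArrayDeque<>();

    public void send( String message ) { messages.addLast( message ); }
    public String receive()            { return messages.pollFirst(); }
}

// Consumer written against the abstraction only; it cannot tell
// which implementation it was handed.
class OrderPublisher
{
    private final MessageQueue queue;

    OrderPublisher( MessageQueue queue ) { this.queue = queue; }

    void publish( int orderId ) { queue.send( "order:" + orderId ); }
}
```

A test can construct OrderPublisher with an InMemoryQueue and inspect what was sent; production code would hand in whatever real implementation of MessageQueue the application uses.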

Interface segregation refers to creating tightly focused interfaces so that classes which implement the interface are not forced to depend on parts of the interface they do not use. This is single responsibility again but from an interface point of view, and as with single responsibility for classes and methods a unit test will be more effective when only the salient part of the interface is included in the test.

Having a cohesive interface, much like having a cohesive class, leads to a much cleaner contract and less likelihood of bugs and side effects.
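To make this concrete, here is a small sketch with two narrow interfaces in place of one wide "repository" interface. UserReader, UserWriter and WelcomeBanner are hypothetical names chosen for illustration.

```java
// Hypothetical, tightly focused interfaces instead of one wide one.
interface UserReader
{
    String findName( int id );
}

interface UserWriter
{
    void save( int id, String name );
}

// This class only reads, so it depends only on UserReader and is
// never forced to know about save().
class WelcomeBanner
{
    private final UserReader users;

    WelcomeBanner( UserReader users ) { this.users = users; }

    String textFor( int id ) { return "Welcome, " + users.findName( id ) + "!"; }
}
```

Because UserReader has a single method, a test can supply it as a one-line lambda, for example new WelcomeBanner( id -> "Ada" ), and nothing about writing users needs to be stubbed.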

Let's say you have object A and object B. Object A depends on object B to work, but rather than creating object B within object A, you pass a reference to object B into object A's constructor or a property of object A. This is dependency injection.

When coding using TDD you will often want to create any dependent objects outside of the class you are testing. This allows you to pass different types of test double into the object under test as well as real objects when the class has been integrated into an application.
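The sketch below shows constructor injection under some assumptions of mine: TimeSource plays the role of object B and SessionTimer the role of object A. Both names are hypothetical.

```java
// Hypothetical "object B": something that supplies the current time.
interface TimeSource
{
    long nowMillis();
}

// Production implementation of B.
class SystemTimeSource implements TimeSource
{
    public long nowMillis() { return System.currentTimeMillis(); }
}

// Hypothetical "object A": it never creates its own TimeSource;
// one is handed in through the constructor.
class SessionTimer
{
    private final TimeSource time;
    private final long       startedAt;

    SessionTimer( TimeSource time )
    {
        this.time      = time;
        this.startedAt = time.nowMillis();
    }

    long elapsedMillis() { return time.nowMillis() - startedAt; }
}
```

In a test, a controllable stand-in for B (a lambda returning whatever time the test dictates) makes elapsedMillis() deterministic. In the application, new SessionTimer( new SystemTimeSource() ) wires in the real clock.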

http://java.dzone.com/articles/test-driven-development-three

Here's an interesting article and video: https://dzone.com/articles/tdd-getting-started-video. It inspires me to follow it up with my own notes here. I'm mostly in agreement with what the author says and does. The video demonstrates considerably more sophistication in the implementation of the subject under test (Calculator) by conjecturing a source for numbers (in lieu of two arguments). He does this in order to create a need to introduce Mockito, which I also use in the same way he does. I think Mockito is a spectacularly useful and wonderful thing. However, I'm here to talk about TDD and get the point home in a simpler example. For Mockito, please see my Notes on Mockito page.

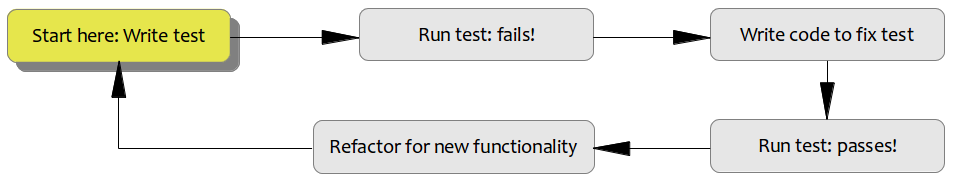

Ordinarily, one writes unit tests according to these steps: arrange, act, assert.

That is, the objects are defined (arranged) at the top of the code, whether as private or private static fields or at the top of the test-case method.

Then, we make calls to or otherwise act upon those objects in imitation of how they'd be used in production code.

Last, we assert against the state of the result, the object's values, etc.
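The three steps can be sketched as follows. To keep the example runnable on its own, it uses a plain static method in place of a JUnit test case, and the Counter class is hypothetical.

```java
// Hypothetical class under test.
class Counter
{
    private int count;

    void increment() { count++; }
    int  value()     { return count; }
}

class CounterCheck
{
    // Plain-Java stand-in for a test case, so it runs without a framework.
    static void incrementTwiceYieldsTwo()
    {
        // Arrange: define the objects the test needs.
        Counter counter = new Counter();

        // Act: use them the way production code would.
        counter.increment();
        counter.increment();

        // Assert: check the resulting state.
        if( counter.value() != 2 )
            throw new AssertionError( "expected 2 but was " + counter.value() );
    }

    public static void main( String[] args )
    {
        incrementTwiceYieldsTwo();
        System.out.println( "ok" );
    }
}
```

In a real JUnit test the method would carry @Test and the final check would be one of the framework's assertions, but the arrange, act, assert ordering is the same.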

In a TDD methodology, this could be considered almost upside-down. What we really want to do is start at the end—by asserting the features and functionality of our product. I think I'm making this a bit clearer than this inspiring author:

We create the unit-test class and a test case. Once the basic code skeleton is implemented, we don't define our objects, but immediately write assertions against the state we want those (future) objects to be in at the end of execution.

This means that our code will exhibit errors (missing symbols) which is exactly what we want.

Next, we repair the errors by creating the object(s) involved in the assertion.

Last, we define our classes and objects in such a way as to solve the problem that is creating our product.

    package com.etretatlogiciels.tdd.example;

    import org.junit.Test;

    import static org.junit.Assert.assertTrue;

    public class CalculatorTest
    {
        @Test
        public void testAddTwoNumbers()
        {
            // Written first, on purpose: 'answer' does not exist yet, so this does not compile.
            assertTrue( "The addition failed", answer == 3 );
        }
    }

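    @Test
    public void testAddTwoNumbers()
    {
        // Declaring 'answer' repairs the missing symbol; it has no value yet,
        // so the compiler still rejects the assertion.
        int answer;
        assertTrue( "The addition failed", answer == 3 );
    }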

    @Test
    public void testAddTwoNumbers()
    {
        // The value will come from a Calculator, a class that does not exist yet.
        Calculator calculator = new Calculator();
        int answer;
        assertTrue( "The addition failed", answer == 3 );
    }

    // Named constants replace the magic numbers in the test.
    private static final int A = 1, B = 2, A_PLUS_B = 3;

    @Test
    public void testAddTwoNumbers()
    {
        Calculator calculator = new Calculator();
        // addTwoNumbers() is the last missing symbol.
        int answer = calculator.addTwoNumbers( A, B );
        assertTrue( "The addition failed", answer == A_PLUS_B );
    }

If we're using IntelliJ IDEA and, with the cursor on addTwoNumbers() above (in the test code), we hold down ALT and press ENTER, we'll get this new class filled out roughly like this:

    package com.etretatlogiciels.tdd.example;

    public class Calculator
    {
        public int addTwoNumbers( int a, int b )
        {
            // Generated stub: everything compiles, but the test fails because 0 != 3.
            return 0;
        }
    }

    package com.etretatlogiciels.tdd.example;

    public class Calculator
    {
        public int addTwoNumbers( int a, int b )
        {
            // Real implementation: the test now passes.
            return a + b;
        }
    }

This is how we incrementally add features and functionality to our product. The failures of the tests we write to demonstrate what our product does prompt us to fix those failures and so make our tests pass.
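As a sketch of what the next increment might look like, suppose we want subtraction. The subtractTwoNumbers() method is hypothetical and is not part of the example above. To keep the sketch runnable without JUnit, the check is a plain static method; it is written first, and Calculator is then extended just enough to satisfy it.

```java
// Hypothetical next increment: the check for subtraction is written first...
class CalculatorNextStep
{
    static void checkSubtractTwoNumbers()
    {
        Calculator calculator = new Calculator();
        int answer = calculator.subtractTwoNumbers( 3, 2 );
        if( answer != 1 )
            throw new AssertionError( "The subtraction failed" );
    }
}

// ...and only then is Calculator extended just enough to make it pass.
class Calculator
{
    public int addTwoNumbers( int a, int b )      { return a + b; }
    public int subtractTwoNumbers( int a, int b ) { return a - b; }
}
```

Until subtractTwoNumbers() exists, the check does not even compile, which is the same missing-symbol prompt we followed for addition.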

What benefits do we have at the end of this exercise?